LlamaIndex and LangChain Integration

In this blog article, we will create a chatbot that integrates LlamaIndex and LangChain. Both are libraries for building applications on top of LLMs, which makes them a good fit for a wide range of language processing applications.

Importance of Large Language Models (LLMs)

LLMs are a major advance in the field of NLP and have changed the way we interact with AI. These models can understand and generate human-like text, which enables them to perform a wide range of language-based tasks.

A key strength of LLMs is their ability to generate coherent, contextually relevant text. Because they are trained on vast amounts of text, they learn the intricacies of language patterns, grammar, and semantics. As a result, they can answer questions, write articles, compose poetry, and hold meaningful conversations with users. This has significant implications across multiple domains, including customer service, content creation, and virtual assistance.

Why use LlamaIndex and LangChain?

LlamaIndex and LangChain are two powerful frameworks that offer significant advantages for developers working with language models.

LlamaIndex is a data framework designed to augment language models with private data. Its data connectors ingest diverse sources such as APIs, PDFs, and SQL databases, and its retrieval and query interface lets developers fetch the information most relevant to a given query. Combined with LangChain, it gives developers a modular framework that simplifies the development of language model applications.

LangChain’s components and off-the-shelf chains provide abstractions and pre-built solutions, making it easy to customize existing chains or build new ones for specific use cases. In addition, LangChain’s agents let a language model decide which tools to call and act on their results, which enables dynamic, interactive applications. Together, LlamaIndex and LangChain offer a powerful toolkit for building data-aware, interactive, and highly customizable language model applications.

Step-by-Step Guide to Integrating LlamaIndex and LangChain

Import the required libraries

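Before you proceed with the implementation, make sure the required libraries are installed. The imports below use the pre-0.10 llama-index package layout (`from llama_index import ...`); in llama-index 0.10 and later, these classes live in `llama_index.core`.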

```python
# Run in a Jupyter/Colab cell; drop the leading "!" when installing from a terminal.
!pip install openai langchain llama-index
```

```python
import logging
import os
import sys

import openai
from langchain.agents import Tool, initialize_agent
from langchain.chains.conversation.memory import ConversationBufferMemory
from langchain.chat_models import ChatOpenAI
from llama_index import SimpleDirectoryReader, VectorStoreIndex

# Send LlamaIndex's INFO-level logs (e.g. embedding and query progress) to stdout.
logging.basicConfig(stream=sys.stdout, level=logging.INFO)
```

Save OpenAI API Keys

Set the OpenAI API key so that LangChain, LlamaIndex, and the `openai` client can authenticate with the OpenAI services. Keep the key itself out of shared notebooks and version control.
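Rather than pasting the key into the notebook, you can read it from an environment variable you export in your shell. The sketch below assumes the variable is named `OPENAI_API_KEY`; `get_openai_api_key` is a hypothetical helper, not part of either library. It fails fast with a clear message when the key is missing.

```python
import os


def get_openai_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment (hypothetical helper).

    Raises RuntimeError if the variable is unset or blank, so a missing key
    is reported up front instead of as an authentication error later.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"{env_var} is not set; export it before running the notebook.")
    return key
```

With the variable exported, `openai.api_key = get_openai_api_key()` replaces the hard-coded string.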

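```python
# Replace the placeholder with your own key.
os.environ["OPENAI_API_KEY"] = "<your-api-key>"  # picked up by LangChain and LlamaIndex
openai.api_key = os.environ["OPENAI_API_KEY"]     # used by direct openai client calls
```

Load the Data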

The rest of the code depends on a valid OpenAI API key. Next, place your context as a .txt file inside a `data` directory. The first step is to load this data, which LlamaIndex represents as `Document` objects. Data loaders (readers) such as `SimpleDirectoryReader` produce these Documents through their `load_data` method.
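To make the "files in, Document objects out" step concrete, here is a minimal sketch of what a directory reader does for plain-text files. The `Document` dataclass and `load_text_documents` function are hypothetical stand-ins written with the standard library only; the real `SimpleDirectoryReader` supports more file types and attaches richer metadata.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class Document:
    """Hypothetical stand-in for a loaded document: raw text plus metadata."""
    text: str
    metadata: dict = field(default_factory=dict)


def load_text_documents(directory: str) -> list[Document]:
    """Read every .txt file directly inside `directory` into a Document (non-recursive)."""
    docs = []
    for path in sorted(Path(directory).glob("*.txt")):  # sorted for a stable order
        text = path.read_text(encoding="utf-8")
        docs.append(Document(text=text, metadata={"file_name": path.name}))
    return docs
```

Calling `load_text_documents("data")` returns one `Document` per .txt file, which mirrors the shape of what `load_data` hands to the index.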

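Next, build an index over these `Document` objects. The simplest high-level approach is to pass the Documents in when the index is created, using `VectorStoreIndex.from_documents`. A vector store index splits the documents into chunks, computes an embedding for each chunk, and stores the embeddings in a vector store (an in-memory store by default), so that the chunks most similar to a query can be retrieved later.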
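The retrieval idea behind a vector store index can be sketched without any external service. In this toy version, word counts stand in for a learned embedding model (an assumption made only so the example runs offline), and chunks are ranked by cosine similarity to the query. `ToyVectorIndex`, `embed`, and `cosine` are hypothetical names, not LlamaIndex APIs.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts, standing in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorIndex:
    """Hypothetical minimal index: embed once at build time, rank by similarity at query time."""

    def __init__(self, texts: list[str]):
        self.texts = texts
        self.vectors = [embed(t) for t in texts]

    def retrieve(self, query: str, top_k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(range(len(self.texts)), key=lambda i: cosine(q, self.vectors[i]), reverse=True)
        return [self.texts[i] for i in ranked[:top_k]]
```

In the real pipeline, the query engine then passes the retrieved chunks to the LLM, which writes an answer grounded in them.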

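```python
# Load every file in ./data as Document objects.
documents = SimpleDirectoryReader("data").load_data()

# Chunk and embed the documents, storing the vectors in an in-memory vector store.
index = VectorStoreIndex.from_documents(documents=documents)
```

Configure Tools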

A LangChain agent is initialized with a list of tools. Here, the single tool wraps the LlamaIndex query engine, so the agent can answer questions by querying the index.
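Conceptually, a tool is just a named function plus a description the agent's LLM reads when deciding what to call. The sketch below models that with a hypothetical `SimpleTool` dataclass and a placeholder `fake_query_engine`; neither is the LangChain or LlamaIndex API, and the canned answer stands in for a real index query.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimpleTool:
    """Hypothetical stand-in for a LangChain-style tool."""
    name: str
    description: str  # tells the LLM when this tool is useful
    func: Callable[[str], str]
    return_direct: bool = False  # if True, the tool output goes straight to the user

    def run(self, query: str) -> str:
        return self.func(query)


def fake_query_engine(question: str) -> str:
    """Placeholder for querying the index; returns a canned string."""
    return f"Answer from the documents for: {question}"


docs_tool = SimpleTool(
    name="LlamaIndex",
    description="Useful for answering questions about the documents in the data directory.",
    func=fake_query_engine,
    return_direct=True,
)
```

Because the agent chooses tools by reading `description`, a description that states what the tool answers works better than one that only names the bot.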

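```python
# Build the query engine once instead of on every tool call.
query_engine = index.as_query_engine()

tools = [
    Tool(
        name="LlamaIndex",
        # The agent's LLM reads this description to decide when to use the tool.
        description="Useful for answering questions about the documents in the data directory.",
        func=lambda q: str(query_engine.query(q)),
        # Return the tool's answer to the user as-is, without another LLM pass.
        return_direct=True,
    ),
]
```

Integrate LlamaIndex and LangChain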

Put simply, LangChain is often used to build ChatGPT-like applications over custom data. With the custom data already loaded as documents and indexed, the next step is to set up the LLM and its memory. If you have used ChatGPT or a similar LLM chat interface, you know it remembers earlier messages in the conversation. `ConversationBufferMemory` provides the same behavior in LangChain: it stores the chat history and supplies it to each new prompt.
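The idea behind a buffer memory fits in a few lines: keep every exchange and render the whole history as text that is inserted into the next prompt. `ToyBufferMemory`, `add_exchange`, and `render` are hypothetical names chosen for this sketch and are not LangChain's method signatures; the `chat_history` key matches the one used in this article.

```python
class ToyBufferMemory:
    """Hypothetical minimal buffer memory: stores every turn, replays it as text."""

    def __init__(self, memory_key: str = "chat_history"):
        self.memory_key = memory_key
        self.turns: list[tuple[str, str]] = []

    def add_exchange(self, user_input: str, ai_output: str) -> None:
        """Record one user message and the model's reply."""
        self.turns.append(("Human", user_input))
        self.turns.append(("AI", ai_output))

    def render(self) -> dict[str, str]:
        """Return the full history under `memory_key`, ready to fill a prompt variable."""
        history = "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)
        return {self.memory_key: history}
```

Because the entire history is replayed on every turn, a plain buffer grows with the conversation; that is the trade-off for its simplicity.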

Chains let you combine multiple components into a single application. For example, a chain can take user input, format it with a `PromptTemplate`, and then pass the formatted prompt to an LLM.
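The input, template, LLM pipeline described above is function composition at heart. In this sketch, `make_chain` is a hypothetical helper, the template is assumed to contain a `{question}` placeholder, and `echo_llm` is a fake model that upper-cases its prompt so the example runs without an API call.

```python
from typing import Callable


def make_chain(template: str, llm: Callable[[str], str]) -> Callable[[str], str]:
    """Compose 'fill the prompt template' and 'call the model' into one callable.

    Assumes `template` contains a {question} placeholder.
    """
    def chain(user_input: str) -> str:
        prompt = template.format(question=user_input)  # step 1: format the input
        return llm(prompt)                              # step 2: pass the prompt to the model
    return chain


def echo_llm(prompt: str) -> str:
    """Fake LLM for illustration: upper-cases the prompt instead of calling an API."""
    return prompt.upper()


qa_chain = make_chain("Answer briefly: {question}", echo_llm)
```

For example, `qa_chain("what is an index?")` returns `"ANSWER BRIEFLY: WHAT IS AN INDEX?"`; swapping `echo_llm` for a real model call changes nothing else in the chain.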

Finally, we create an agent. An agent has access to a set of tools and decides which of them to use based on the user input. Once the memory and LLM are set up, the agent uses the tools to answer questions based on the user input. The agent works as follows:

- Reads the user's input prompt.
- Decides which tool to use; in our case, the LlamaIndex query engine.
- Decides how to respond based on the tool outputs and the conversation history.
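The three steps above can be sketched as a small loop. A real conversational ReAct agent asks the LLM which tool to call; in this offline sketch, a keyword match stands in for that decision, which is a deliberate simplification. `ToyAgent` and its keyword-based tool registry are hypothetical and not LangChain's agent API.

```python
import re
from typing import Callable, Optional

# Hypothetical tool registry entry: (trigger keywords, function).
ToolSpec = tuple[list[str], Callable[[str], str]]


class ToyAgent:
    """Offline sketch of the read -> choose tool -> respond loop."""

    def __init__(self, tools: dict[str, ToolSpec]):
        self.tools = tools
        self.history: list[str] = []

    def choose_tool(self, user_input: str) -> Optional[str]:
        """Keyword routing standing in for the LLM's tool choice."""
        words = set(re.findall(r"[a-z]+", user_input.lower()))
        for name, (keywords, _) in self.tools.items():
            if words & set(keywords):
                return name
        return None

    def run(self, user_input: str) -> str:
        self.history.append(f"Human: {user_input}")   # 1. read the input
        tool_name = self.choose_tool(user_input)       # 2. decide which tool to use
        if tool_name is None:
            answer = "Sorry, I can only answer questions about the documents."
        else:
            _, func = self.tools[tool_name]
            answer = func(user_input)                  # 3. respond from the tool output
        self.history.append(f"AI: {answer}")          # kept for later turns
        return answer
```

A real agent also feeds `history` back into the LLM when deciding the next step; this sketch only records it.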

memory = ConversationBufferMemory(memory_key="chat_history")

llm = ChatOpenAI(temperature =0)

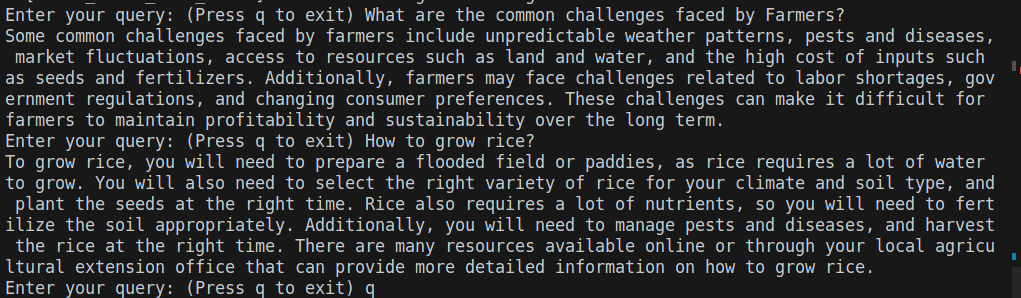

agent_executor = initialize_agent(tools, llm, agent="conversational-react-description", memory=memory)Run the Agent

Now ask a question and let the agent run and respond.

```python
while True:
    prompt = input("Enter your query (type q to exit): ")
    # Exit only on an exact "q", so questions that start with "q" still work.
    if prompt.strip().lower() == "q":
        break
    info = agent_executor.run(input=prompt)
    print(info)
```

Output:
